A few months back, I ran out of storage space on my PC, so I bought a new SSD: a nice 1TB NVMe model with an SLC cache. However, I ran into a problem, because my old SSD, which I still wanted to keep, was a SATA III M.2 drive. My motherboard had two M.2 slots, but only one of them, the x4 slot, supported SATA III. This meant I had to put my new, faster SSD into the lower-bandwidth x2 slot, or I would not be able to use both drives at the same time. However, I got my hands on one of those PCIe-to-M.2 adapters, so I started to wonder what would happen to my new SSD's performance if I ran it through the adapter in several PCIe slots of different bandwidths, to see how much of an impact slot bandwidth really has on a consumer SSD.

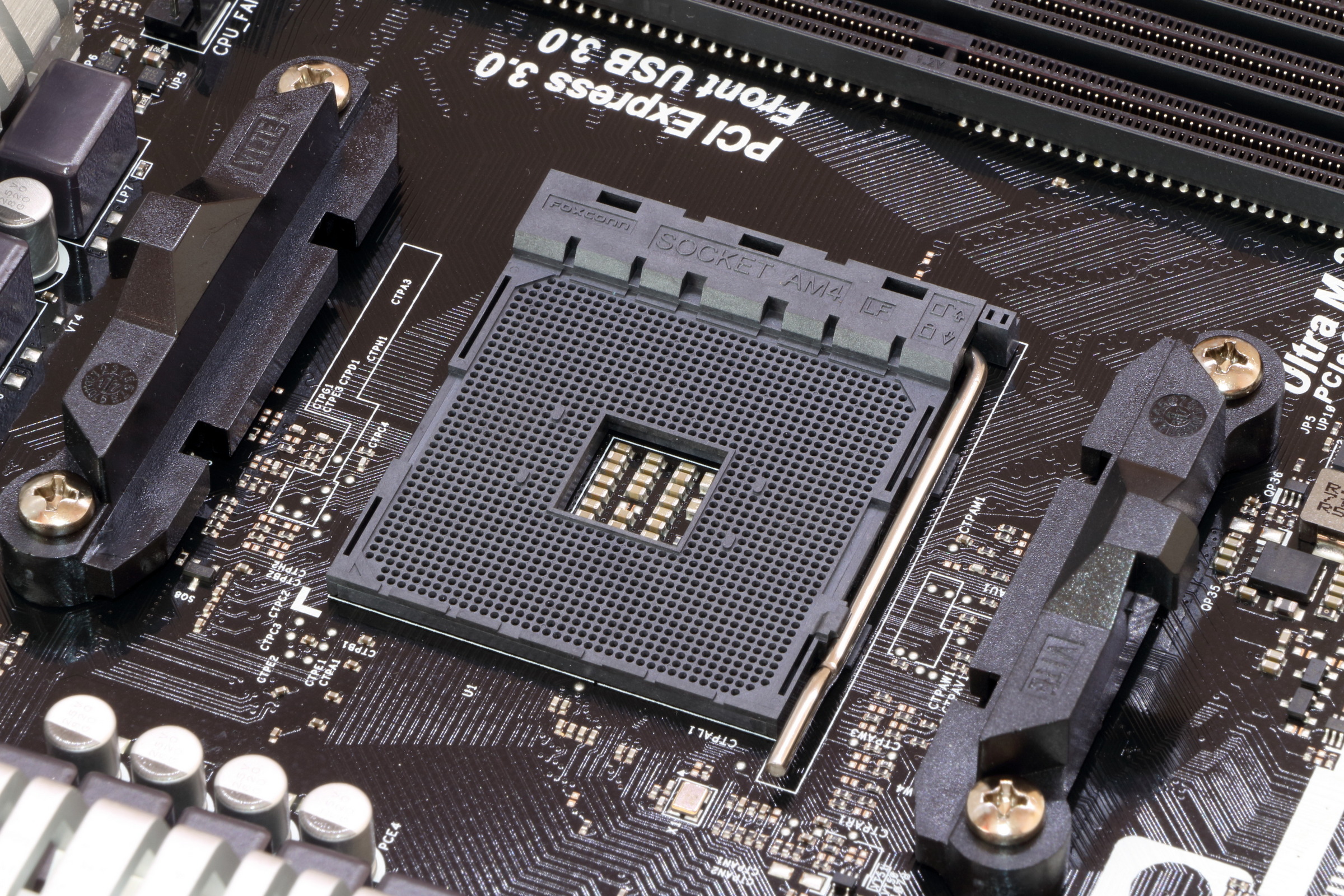

Then I realized I was dumb and had completely forgotten that NVMe SSDs top out at four PCIe lanes anyway, so testing anything wider than x4 would be pointless. GPUs, however, do not top out at x4. So rather than scrap the idea completely, I decided to test GPUs instead of SSDs. We then realized that a more powerful system would be held back by a narrower slot much more than a weaker one, so my friend Adam ran all the benchmarks on his ridiculous Ryzen 7 5800X and RTX 3090 build, which you can read about here. We tested three applications (Unigine Heaven, Apex Legends, and Cyberpunk 2077) in two slot widths, x16 and x4, both running at PCIe 3.0, to find out whether the slot you use on your motherboard genuinely makes a difference in day-to-day usage.
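To put the x16 versus x4 difference in numbers, the theoretical link bandwidth can be worked out from each PCIe generation's per-lane transfer rate and line encoding. Here is a minimal Python sketch; the function and table names are my own, and the results ignore packet and protocol overhead, so real-world throughput is somewhat lower:

```python
# Theoretical one-direction PCIe link bandwidth. Packet headers and other
# protocol overhead are ignored, so real throughput is somewhat lower.

# Per-generation raw transfer rate (GT/s per lane) and line-encoding efficiency.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_gb_s(generation: int, lanes: int) -> float:
    """Return approximate one-direction bandwidth in GB/s (10^9 bytes/s)."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer moves one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gt_s * efficiency * lanes / 8


if __name__ == "__main__":
    for lanes in (2, 4, 16):
        print(f"PCIe 3.0 x{lanes}: {pcie_bandwidth_gb_s(3, lanes):.2f} GB/s")
```

On PCIe 3.0 this works out to roughly 15.75 GB/s for x16 and 3.94 GB/s for x4, so the x4 slot offers a quarter of the bandwidth. An NVMe SSD only uses four lanes, which is why a wider slot would not have helped it.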

First up is Unigine Heaven, a GPU benchmarking and stress-testing tool. After running all scenes, here is the data for the x16 PCIe 3.0 slot:

And here is the data for the x4 PCIe 3.0 slot:

Right away, you can see a steady 30 to 40 FPS drop in Max FPS when you move the GPU from the x16 slot to the x4 slot. It also makes sense that Min FPS stays consistent between the two slots, anywhere from 45 to 60 FPS: at the minimum frame rate, the fewest frames are being pushed through the slot each second, so the slot's bandwidth is the least likely to be maxed out. Likewise, it makes sense that the drop in Average FPS falls between the Min and Max drops, at about 10 FPS per trial.
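Min, Average, and Max FPS all come from the same run, and the per-metric drop is just the difference between the two slots. The sketch below assumes per-frame render times in milliseconds as input; the function names are hypothetical, and Unigine Heaven and in-game counters may sample and smooth their numbers differently, so treat this as an illustration of the idea rather than how those tools compute it:

```python
def fps_summary(frame_times_ms: list[float]) -> dict[str, float]:
    """Summarize one run from per-frame render times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # The slowest frame gives the minimum instantaneous FPS, the fastest the maximum.
    min_fps = 1000.0 / max(frame_times_ms)
    max_fps = 1000.0 / min(frame_times_ms)
    # Average FPS as total frames over total elapsed seconds, so slow frames
    # count for as long as they stayed on screen.
    avg_fps = len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)
    return {"min": min_fps, "avg": avg_fps, "max": max_fps}


def fps_drop(x16: dict[str, float], x4: dict[str, float]) -> dict[str, float]:
    """FPS lost on each metric when moving from the x16 run to the x4 run."""
    return {metric: x16[metric] - x4[metric] for metric in ("min", "avg", "max")}
```

For frame times of 10, 20, 10, and 20 ms, `fps_summary` gives a Min of 50, a Max of 100, and an Average of about 66.7 FPS. Simply averaging the per-frame FPS values (100, 50, 100, 50) would give 75 instead, which overstates how smooth the run felt.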

There are some surprising differences for Apex Legends, though:

I mentioned earlier that it made sense for Min FPS to stay constant between the two sets of Unigine Heaven benchmarks, since the minimum frame rate is the point where the slot's capability is least likely to be maxed out. What is very interesting about Apex Legends is that, for some reason, the x4 Min FPS is visibly lower than the x16 Min FPS in all three trials. This doesn't make sense: the x4 Max FPS is higher than the x16 Min FPS, so the x4 slot is clearly capable of reaching the x16 minimum. It also can't be a fluke since, as I said, it happened in all three trials. Oddly, the Max FPS, which should be the easiest stat to bottleneck here, barely decreased between the x16 and x4 benchmarks. My best guess is that something in the Source engine, which Apex Legends is built on, behaves differently than other applications do. Unfortunately, we did not benchmark another Source game, so I cannot validate this claim. It is also possible that, while running Apex Legends, this PC is bottlenecked by something other than the slot (such as the CPU) even in the x16 slot, which would explain why Max FPS stays the same across both slots. However, that seems unlikely, since the Min FPS still decreased, and Unigine Heaven, which is far easier to run, did not hit a bottleneck in the x16 slot.
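The two checks in that argument, that the x4 Min FPS drop repeats in every trial and that the x4 slot can reach the x16 Min FPS at all, can be written out as a small sketch. Each trial is assumed to be a dict with "min", "avg", and "max" keys, and trials are paired by position; both the format and the function names are hypothetical, not an export format of any benchmarking tool:

```python
Trial = dict[str, float]  # hypothetical format: {"min": ..., "avg": ..., "max": ...}


def _paired(x16_trials: list[Trial], x4_trials: list[Trial]):
    """Pair the nth x16 trial with the nth x4 trial."""
    if len(x16_trials) != len(x4_trials):
        raise ValueError("x16 and x4 runs must have the same number of trials")
    return zip(x16_trials, x4_trials)


def dropped_in_every_trial(x16_trials: list[Trial], x4_trials: list[Trial], metric: str) -> bool:
    """True if the x4 value is lower than the x16 value in every paired trial."""
    return all(x4[metric] < x16[metric] for x16, x4 in _paired(x16_trials, x4_trials))


def x16_min_reachable_on_x4(x16_trials: list[Trial], x4_trials: list[Trial]) -> bool:
    """True if, in every pair, the x4 Max FPS exceeds the x16 Min FPS."""
    return all(x4["max"] > x16["min"] for x16, x4 in _paired(x16_trials, x4_trials))
```

Run on the three Apex Legends trial pairs, `dropped_in_every_trial(..., "min")` corresponds to the observation that the x4 Min FPS was lower every time, and `x16_min_reachable_on_x4` corresponds to the argument that the x16 minimum is within reach of the x4 slot.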

To some extent, Cyberpunk 2077's benchmarks follow the same pattern as Unigine Heaven's: Min FPS decreased, but far less than Max FPS and Average FPS did.

Overall, slot bandwidth clearly makes a difference in the performance of GPU-demanding applications, but we already knew that. Two things surprised me: A. the frame rate dropped more than I expected when switching slots, and B. in Apex Legends, Min FPS dropped more than Max FPS. Extremely bizarre. Again, my best theory is that it comes down to the Source engine, but that is nothing more than a weak inference.

Anyway, the lesson is: make sure your GPU is in your x16 slot. Unless your motherboard only has one x16 slot and you are running multiple GPUs with NVLink or something like that. In which case, well, get a therapist.